For nearly as long as I’ve been using Linux I have had some system on my home network acting as a server or test bed for various pieces of software or services. In the beginning this system might be my DHCP and NAT gateway, later it might be a file server, but over the years I have almost always had some sort of system running that acted as a server of some kind. These systems were often configured with the same operating system I was using in the workplace, running similar services where it made sense. This has always given me a way to practice upgrades and service configuration changes, and to stay as familiar with things as I possibly could.

As I’ve moved along in my career, the services I deal with have gotten more complex and what I want running at home has grown more complex to match. Although my home lab pales in comparison to what others have done, I thought it would still be fun to go through what I have running.

Hardware

Like a lot of people, the majority of the hardware I’m running is older hardware that isn’t well suited for daily use. Some of it I got for free, some of it was previously used to run Windows and so on. Unlike what seems to be the case for most home lab enthusiasts, I like to keep things as basic as possible. If a consumer grade device is capable of delivering what I need at home then I will happily stick to that.

On the network side, my home is serviced with cable based Internet. This goes into an ISP provided Arris cable modem and immediately behind this is a Google WiFi access point. Nothing elaborate here, just a “basic” WiFi router that handles all DHCP and NAT for my entire network and does a fine job with it. After the WiFi router is a Cisco 3560G 10/100/1000 switch. This sixteen-year-old managed switch does support a lot of useful features, but most of my network is just sitting on VLAN 1 as I don’t have much need for segmenting my network. Attached to the switch are two additional Google WiFi access points, numerous IoT devices, phones, laptops and the like.

Also attached to the switch are, of course, items that I consider part of the home lab. This includes a 2011 HP Compaq 8200 Elite Small Form Factor PC, an Intel i5-3470 based system built around 2012 and a Raspberry Pi 4. The HP system has a number of HDDs and SSDs, 24GB memory, a single gigabit ethernet port and hosts a number of virtual machines. The custom-built Intel i5-3470 system has 16GB memory, a set of three 2TB HDDs and a single SSD for hosting the OS. The Pi4 is a 4GB model with an external SSD attached.

Operating Systems

The base operating system on the HP is Proxmox 7. This excellent operating system is best described as being similar to VMware ESXi. It allows you to host as many virtual machines as your hardware will support, can be clustered, and can even migrate VMs between cluster nodes. Proxmox is definitely a happy medium between having a single system and running a full-on cloud like OpenStack. I can effectively achieve a lot of what a cloud stack would provide but with greater simplicity. Although I can create VMs and manually install operating systems, I have created a number of templates to make creating VMs quicker and easier. The code for building the templates is at https://github.com/dustinrue/proxmox-packer.

On the Intel i5-3470 based system is TrueNAS Core. This system acts as the file store for the entire home network, providing Samba shares (including remote Apple Time Machine support), NFS for Proxmox and iSCSI for Kubernetes. TrueNAS Core is an excellent choice for creating a NAS. Although it is capable of more, I stick to just the basic file serving functionality and don’t get into any of the extra plugins or services it can provide.

The Raspberry Pi 4 is running the 64-bit version of Pi OS. Although it is a beta release, it has proven to work well enough.

Software and Services

The Proxmox system hosts a number of virtual machines. These virtual machines provide:

Kubernetes

On top of Proxmox I also run k3s to provide Kubernetes. Kubernetes allows me to run software and test Helm charts that I’m working on. My Kubernetes cluster consists of a single amd64 based VM running on Proxmox and the Pi4 to give me a true arm64 node. In Kubernetes I have installed:

- cert-manager for SSL certificates. This is configured against my DNS provider to validate certificate requests.

- ingress-nginx for ingress. I do not deploy Traefik on k3s but prefer to use ingress-nginx. I’m more familiar with its configuration and have had good luck with it.

- democratic-csi for storage. This package is able to provide on demand storage for pods that ask for it using iSCSI to the TrueNAS system. It is able to automatically create new storage volumes and share them using iSCSI.

- gitlab-runner for CI/CD. This provides my GitLab server with the ability to run CI/CD jobs.
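To make the list above a little more concrete, here is a minimal sketch of how a test workload might lean on cert-manager, ingress-nginx and democratic-csi at once. It uses Python only to build the manifests as plain dictionaries and print them as JSON. The host name `app.lab.example.com`, the issuer name `letsencrypt-dns` and the storage class name `truenas-iscsi` are hypothetical placeholders, and the ingress class name `nginx` assumes a default ingress-nginx install.

```python
import json


def build_ingress(name: str, host: str, issuer: str) -> dict:
    """Build an Ingress for ingress-nginx with a cert-manager annotation."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": name,
            # cert-manager looks for this annotation and requests a certificate
            # from the named ClusterIssuer (the issuer name is hypothetical).
            "annotations": {"cert-manager.io/cluster-issuer": issuer},
        },
        "spec": {
            # Assumes ingress-nginx registered its default class name.
            "ingressClassName": "nginx",
            "tls": [{"hosts": [host], "secretName": f"{name}-tls"}],
            "rules": [
                {
                    "host": host,
                    "http": {
                        "paths": [
                            {
                                "path": "/",
                                "pathType": "Prefix",
                                "backend": {
                                    "service": {
                                        "name": name,
                                        "port": {"number": 80},
                                    }
                                },
                            }
                        ]
                    },
                }
            ],
        },
    }


def build_pvc(name: str, storage_class: str, size: str = "1Gi") -> dict:
    """Build a PersistentVolumeClaim against a CSI-backed storage class."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            # Hypothetical storage class served by democratic-csi over iSCSI.
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    items = [
        build_ingress("demo", "app.lab.example.com", "letsencrypt-dns"),
        build_pvc("demo-data", "truenas-iscsi"),
    ]
    # A v1 List lets both objects travel in a single JSON document.
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

Since kubectl accepts JSON as well as YAML, the output of a script like this could be piped to `kubectl apply -f -` on a scratch namespace, assuming a Service named `demo` exists for the ingress to point at.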

I don’t currently use Kubernetes at home for anything other than short-term testing. Of everything in my home lab, Kubernetes is the most “lab” part of it. It is where I do most of my Helm chart development and basic testing of software. Having a Pi4 in the cluster really helps with ensuring charts target operating systems and architectures properly. It also helps me validate that the Docker images I build work properly across different architectures.
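One way to exercise a specific architecture in a mixed cluster like this is to pin a throwaway pod to a node using the standard `kubernetes.io/arch` node label. The sketch below is only an illustration of that idea: the image name `registry.example.com/my-image:latest` is a hypothetical placeholder, and it assumes the image ships a `uname` binary.

```python
import json


def build_arch_test_pod(image: str, arch: str) -> dict:
    """Build a one-shot Pod that only schedules on nodes of the given architecture."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": f"arch-test-{arch}"},
        "spec": {
            "restartPolicy": "Never",
            # kubernetes.io/arch is a well-known label the kubelet sets on each
            # node, for example "amd64" on the VM and "arm64" on the Pi4.
            "nodeSelector": {"kubernetes.io/arch": arch},
            "containers": [
                {
                    "name": "test",
                    "image": image,
                    # Assumes the image contains uname; it reports the machine type.
                    "command": ["uname", "-m"],
                }
            ],
        },
    }


if __name__ == "__main__":
    image = "registry.example.com/my-image:latest"  # hypothetical image name
    pods = [build_arch_test_pod(image, arch) for arch in ("amd64", "arm64")]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": pods}, indent=2))
```

If a multi-architecture image is missing one of its variants, the pod pinned to that architecture would be expected to fail to pull or start, which surfaces the problem before a chart depends on it.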

Personal Workstation

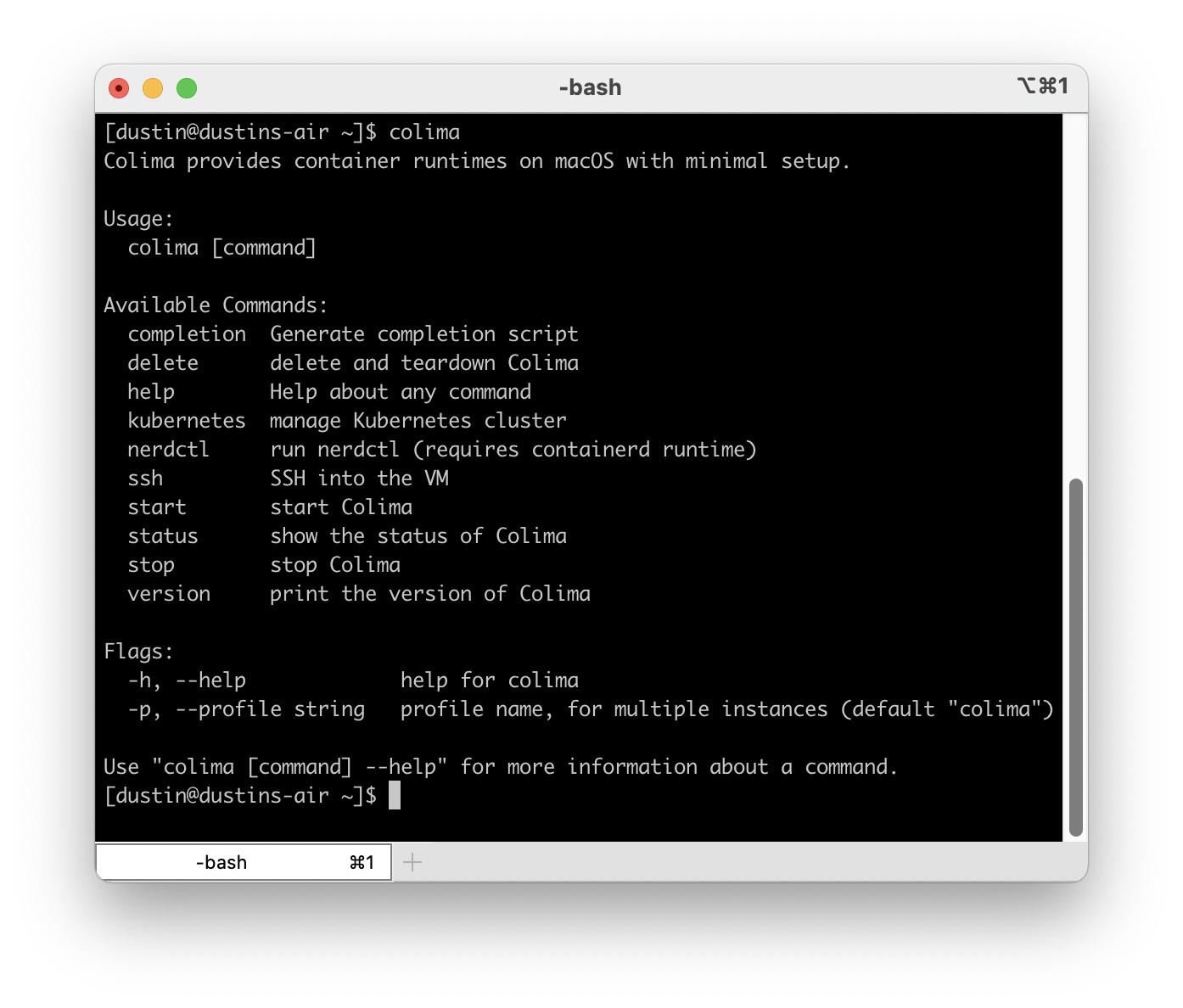

My daily driver is currently an i7 Mac mini. This is, of course, running macOS and includes all of the usual tools and utilities I need. I detailed some time ago the software I use at https://dustinrue.com/2020/03/whats-on-my-computer-march-2020-edition/.

Finishing Up

As you can see, I have a fairly modest home lab setup, but it gives me exactly what I need: it runs the services I actually use on a daily basis and offers a place to test software and try things out. Although there is a limited set of items I run continuously, I can easily use this for testing more advanced setups if I need to.