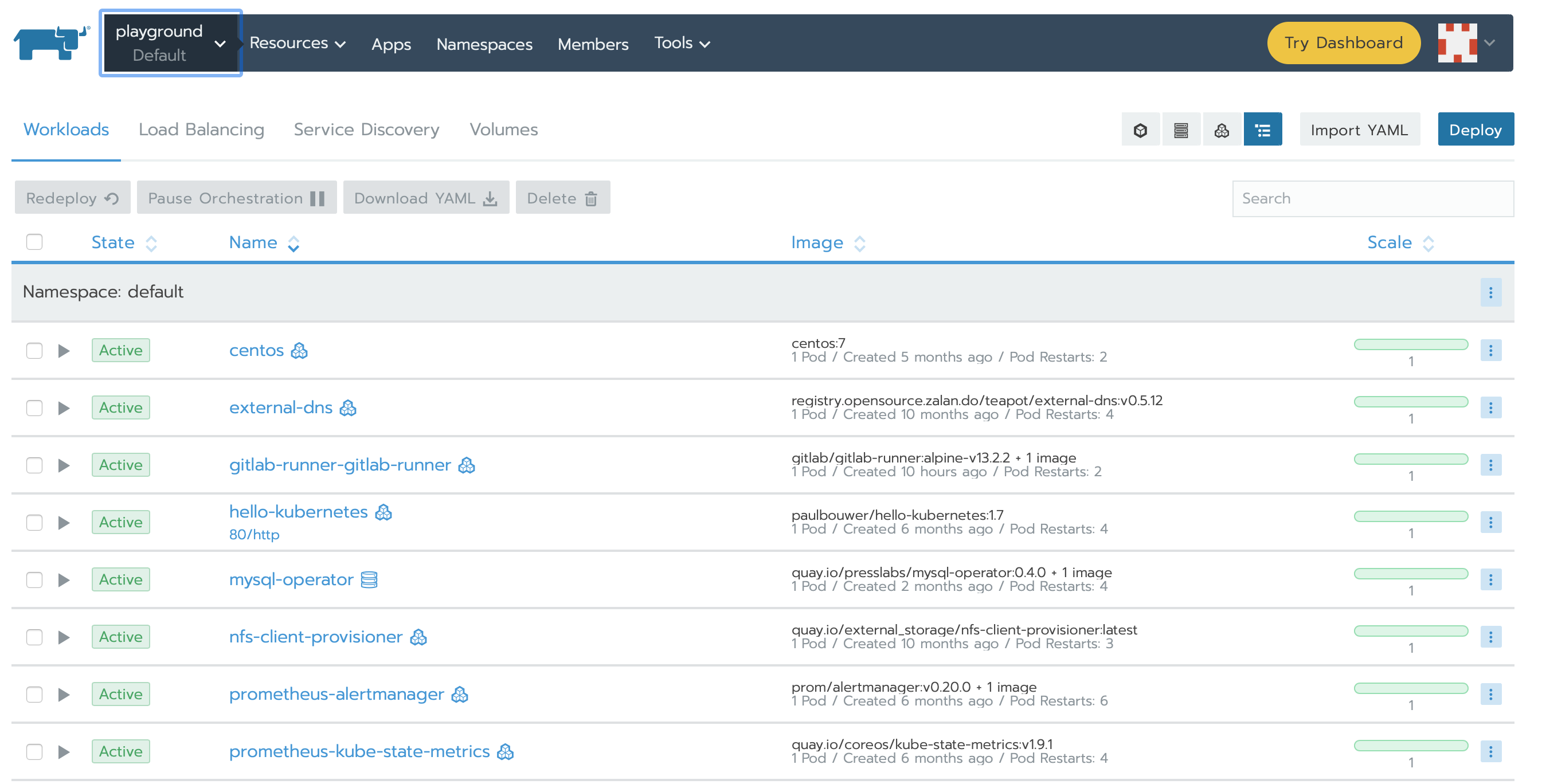

Back in 2008, I bought my second Mac, a unibody MacBook, to give me a more capable and portable system than my existing Mac mini. The mini was a great little introduction to the Mac world but wasn’t portable. The MacBook got used for several years until software got too heavy for it. Rather than getting rid of it, I kept the machine around to run Linux. Eventually, I introduced it as part of my home lab. In my home lab, I use Proxmox as a virtualization system. Proxmox can be set up as a cluster with shared storage so VMs and LXC containers can be migrated between physical hosts as needed. For a while I had Linux installed onto the MacBook and it was part of the Proxmox setup just so I could play around with VM migration.

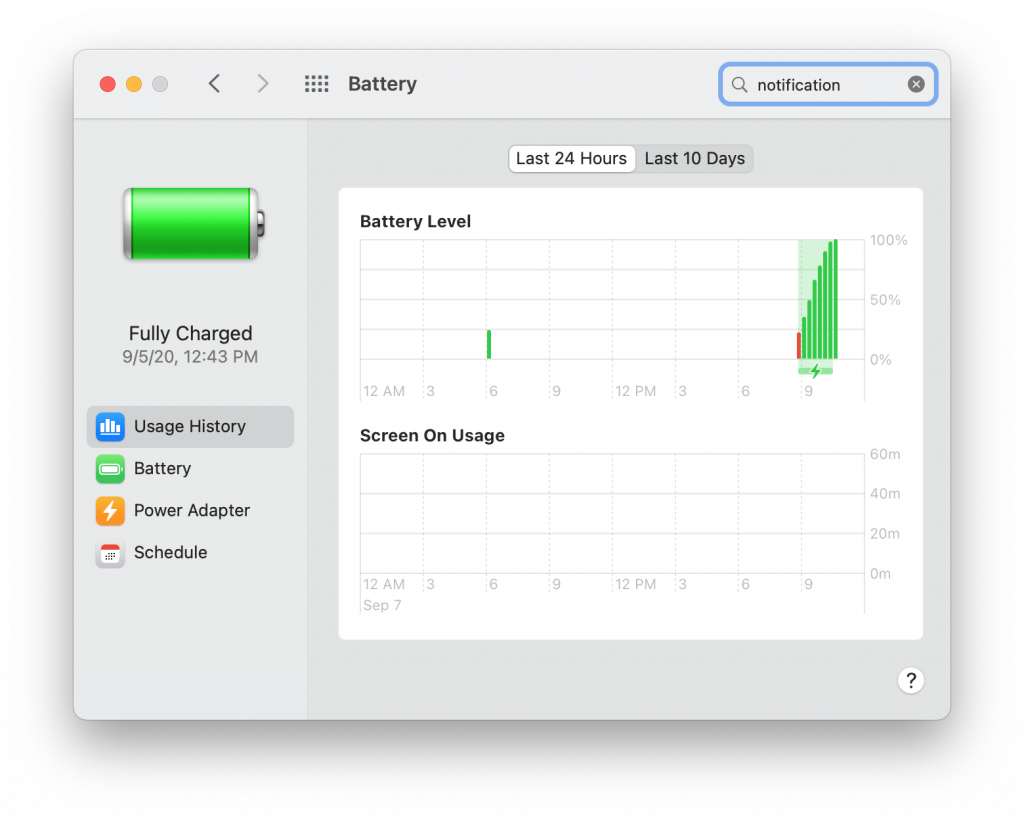

Eventually, though, the limitations of the hardware made keeping the system running and updated more hassle than it was worth, and I removed it from the cluster. Still not wanting to get rid of it, I decided to introduce it into my HiFi system as a way to play music using its built-in optical out (a feature that has been removed from recent Macs) to my receiver. Using optical into the receiver allows me to utilize the DAC that is present in the receiver rather than whatever my current solution is using. In theory, it should sound better. Anyway, this started my adventure in getting macOS running on an older Mac again, which was harder than I had anticipated.

Usually, installing macOS on a Mac is a straightforward affair, at least when the hardware is new. When using older hardware there are a few extra steps you may need to take to get things going. Installing El Capitan on my old MacBook required the following:

- External USB drive to install macOS onto

- USB flash drive to hold the installer files

- Carbon Copy Cloner

- Another Mac

- Install ISO

- Patience

The first issue I ran into was how to actually get an older version of macOS that runs on the machine. I no longer have the restore CD/DVD for the system. Normally I keep these, but for some reason I’m missing the disc for this particular system. Since I had previous experience installing El Capitan on this Mac, I knew there would be issues I’d need to overcome. To make it easier on myself I installed an even older version that I could then upgrade from. I also installed the OS onto an external drive so that I could complete a portion of the install using a different machine.

It is generally agreed upon that Mountain Lion was the last version of macOS (then called OS X) that was not intended to be installed on SSD-based systems. Mountain Lion is also not signed in a way that prevents it from being installed in 2020, an important issue as you’ll later see. After some searching, I found this as a source for the ISO file I needed to install Mountain Lion. Keep in mind that I was installing on a system with a blank hard drive, so I needed to download the fully bootable ISO. The file I downloaded is specifically this one: https://sundryfiles.com/31KE. After downloading the file and using Etcher to copy the ISO to a USB flash drive, I was able to install Mountain Lion without any issues. With a fully working, if outdated, system up and running I moved on to tackling the El Capitan installation.
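For the curious, here is a rough idea of what copying an ISO to a flash drive amounts to: a byte-for-byte copy followed by a read-back check. This is only a minimal sketch of the general technique, not how Etcher itself is implemented. The names `write_image` and `sha256_of` are made up for illustration, and a temporary file stands in for the flash drive so the example is safe to run.

```python
import hashlib
import os
import tempfile

CHUNK_SIZE = 1024 * 1024  # copy 1 MiB at a time


def sha256_of(path, length=None):
    """Hash the first `length` bytes of `path`, or the whole file if None."""
    digest = hashlib.sha256()
    remaining = length
    with open(path, "rb") as handle:
        while remaining is None or remaining > 0:
            size = CHUNK_SIZE if remaining is None else min(CHUNK_SIZE, remaining)
            chunk = handle.read(size)
            if not chunk:
                break
            digest.update(chunk)
            if remaining is not None:
                remaining -= len(chunk)
    return digest.hexdigest()


def write_image(image_path, target_path):
    """Copy the image to the target byte for byte, then verify by re-reading.

    Hypothetical helper: `target_path` is a regular file in this sketch,
    standing in for the flash drive.
    """
    written = 0
    with open(image_path, "rb") as src, open(target_path, "wb") as dst:
        while True:
            chunk = src.read(CHUNK_SIZE)
            if not chunk:
                break
            dst.write(chunk)
            written += len(chunk)
        dst.flush()
        os.fsync(dst.fileno())  # make sure the data has left the OS cache
    # Compare only as many bytes as were written to the target.
    return sha256_of(image_path) == sha256_of(target_path, length=written)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        image = os.path.join(workdir, "installer.iso")  # stand-in image
        target = os.path.join(workdir, "flash-drive.img")  # stand-in drive
        with open(image, "wb") as handle:
            handle.write(os.urandom(2 * CHUNK_SIZE + 123))
        print("verified:", write_image(image, target))
```

In practice a dedicated tool is the safer choice, since pointing a raw copy at the wrong disk will happily overwrite it.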

With the system running I took the necessary steps to get signed into the App Store. This alone is a small challenge because the App Store installed with Mountain Lion doesn’t know how to natively deal with the extra account protections Apple has introduced in recent years. Pay attention to the messaging on screen and it’ll tell you how to log in (it amounts to entering your password plus the security code that appears on your phone or second Mac). Once logged in I downloaded the El Capitan installer to the disk.

After getting the installer I had to deal with the first issue: the installer will fail if there is no battery installed! The battery in my MacBook had been removed because it was beginning to swell. To be safe I removed it so it could be recycled rather than allow it to become a spicy pillow and burn down my house. If you attempt to install El Capitan on a Mac laptop without a battery installed you’ll get a cryptic error about a missing or invalid node. To work around this I removed the external drive from the machine and attached it to another Mac laptop I have that does have a battery. For safety, I also disconnected the internal hard drive prior to finishing the upgrade process.

The next issue I had to deal with was the fact that, while El Capitan is the newest version of macOS that will run on a 2008 MacBook, it is still from 2015. Being fully signed, it will fail to install in 2020 because the certificate used to sign the packages has since expired! To deal with this issue I followed the steps outlined at https://techsparx.com/computer-hardware/apple/macosx/install-osx-when-you-cant.html. Setting the date back worked great and I was able to finish the upgrade using the second Mac. Once the upgrade was done I moved the external drive back to my 2008 MacBook and performed the final step.
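If you are wondering why setting the clock back helps, a signature check compares the current system time against the certificate's validity window. The sketch below illustrates that comparison only. The dates are placeholders I picked for the example, not the real validity dates of Apple's certificate, and `is_within_validity` is a made-up helper name.

```python
from datetime import datetime, timezone


def is_within_validity(check_time, not_before, not_after):
    """Return True if check_time falls inside the certificate's validity window."""
    return not_before <= check_time <= not_after


# Placeholder validity window (assumed for illustration, not Apple's real dates).
NOT_BEFORE = datetime(2015, 1, 1, tzinfo=timezone.utc)
NOT_AFTER = datetime(2019, 1, 1, tzinfo=timezone.utc)

# The clock as it stood during my install attempt, and after setting it back.
install_attempt = datetime(2020, 6, 1, tzinfo=timezone.utc)
clock_set_back = datetime(2016, 1, 1, tzinfo=timezone.utc)

print(is_within_validity(install_attempt, NOT_BEFORE, NOT_AFTER))  # False
print(is_within_validity(clock_set_back, NOT_BEFORE, NOT_AFTER))   # True
```

The installer has no independent idea of what day it is, so once the system clock sits inside the window the check passes.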

The final step of the process is to move the installation from the external drive to the internal drive. My MacBook still has the original 256GB HD that was included with the system. It is very slow by today’s standards but will be just fine for its new use case. For this task, I turned to the excellent Carbon Copy Cloner. After cloning the external drive to the internal drive my installation of El Capitan was complete. I was then able to connect the laptop to my receiver using an optical cable and enjoy music!