I have been running this blog on this domain for over ten years now but the “hardware” has changed a bit. I have always done a VPS but where it lives has changed over time. I started with Rackspace and then later moved to Digital Ocean back when they were the new kid on the block and offered SSD based VPS instances with unlimited bandwidth. I started on a $5 droplet and then upgraded to a pair of $5 droplets so that I could get better separation of concerns and increase the total amount of compute I had at my disposal. This setup has served me very well for the past five years or so. If you are interested in checking out Digital Ocean I have a referral code you can use – https://m.do.co/c/5016d3cc9b25

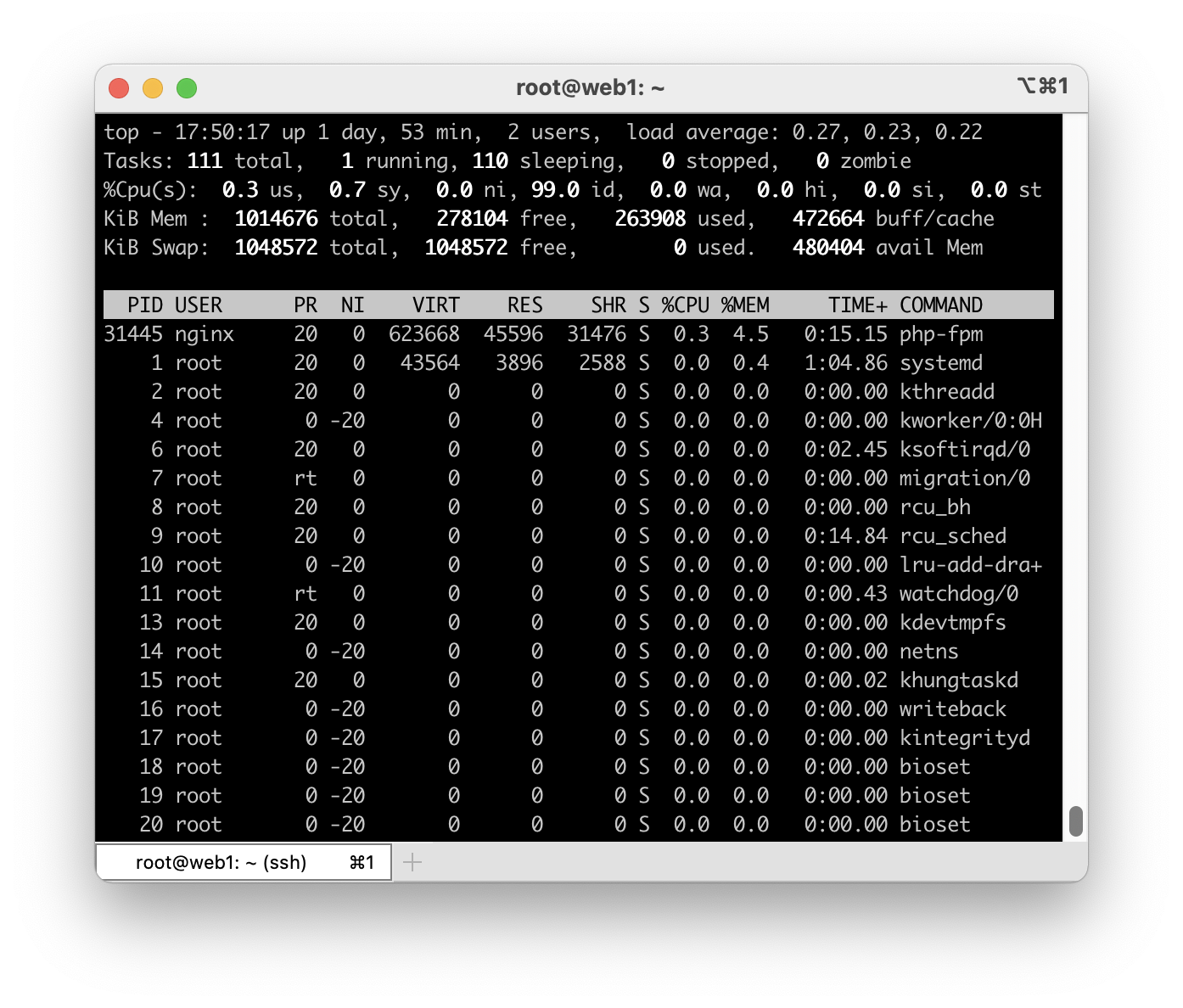

As of this writing, the site is hosted on two of the lowest level droplets Digital Ocean offers which cost $5 a month each. I use a pair of instances primarily because it is the cheapest way to get two vCPU worth of compute. I made the change to two instances back when I was running xboxrecord.us (XRU) as well as a NodeJS app. Xboxrecord.us and the associated NodeJS app (which also powered guardian.theater at the time), combined with MySQL, used more CPU than a single instance could provide. By adding a new instance and moving MySQL to it I was able to spread the load across the two instances quite well. I have since shutdown XRU and the NodeJS app but have kept the split server arrangement mostly because I haven’t wanted to spend the time moving it back to a single instance. Also, how I run WordPress is slightly different now because in addition to MySQL I am also running Redis. Four services (Nginx, PHP, Redis and MySQL) all competing for CPU time during requests is just a bit too much for a single core.

Making the dual server arrangement work is simple on Digital Ocean. The instance that runs MySQL also runs Redis for object and page caching for WordPress. This means Nginx and PHP gets its own CPU and MySQL and Redis get their own CPU for doing work. I am now effectively running a dual core system but with the added overhead, however small, of doing some work across the private network. Digital Ocean has offered private networking with no transfer fees between instances for awhile now so I utilize that move data between the two instances. Digital Ocean also has firewall functionality that I tap into to ensure the database server can only be reached by my web server. There is no public access to the database server at all.

The web server is, of course, publicly available. In front of this server is a floating IP, also provided by Digital Ocean. I use a floating IP so that I can create a new web server and then simply switch where the floating IP points so make it live. I don’t need to change any DNS and my cut overs are fairly clean. Floating IPs are free and I highly recommend always leverage floating IPs in front of an instance.

Although the server is publicly available, I don’t allow for direct access to the server. To help provide some level of protection I use Cloudflare in front of the site. I have used Cloudflare for almost as long as I’ve been on Digital Ocean and while I started out on their free plan I have since transitioned to using their Automatic Platform Optimization system for WordPress. This feature does cost $5 a month to enable but what it gives you, when combined with their plugin, is basically the perfect CDN solution for WordPress. I highly recommend this as well.

In all, hosting this site is about $15 a month. This is a bit steeper than some people may be willing to pay and I could certainly do it for less. That said, I have found this setup to be reliable and worry free. Digital Ocean is an excellent choice for hosting software and keeps getting better.

Running WordPress

WordPress, if you’re careful, is quite light weight by today’s standards. Out of the box it runs extremely quickly so I have always done what I could to ensure it stays that way so that I can keep the site as responsive as possible. While I do utilizing caching to keep things speedy you can never ignore uncached speeds. Uncached responsiveness will always be felt in the admin area and I don’t want a sluggish admin experience.

Keeping WordPress running smoothly is simple in theory and sometimes difficult in practice. In most cases, doing less is always the better option. For this reason I install and use as few plugins as necessary and use a pretty basic theme. My only requirement for the theme is that it looks reasonable while also being responsive (mobile friendly). Below is a listing of the plugins I use on this site.

Akismet

This plugin comes with WordPress. Many people know what this plugin is so I won’t get into it too much. It does what it can to detect and mark command spam as best it can and does a pretty good job of it these days.

Autoptimize

Autoptimize combines js and css files into single files as much as possible. This reduces the total number of requests required to load content. This fulfills my “less is more” requirement.

Autoshare for Twitter

Autoshare for Twitter is a plugin my current employer puts out. It does one thing and it does it extremely well. It shares new posts, when told to do so, directly to Twitter with the title of the post as well as a link to it. When I started I would do this manually. Autoshare for Twitter greatly simplifies this task. Twitter happens to be the only place I share new content to.

Batcache

Batcache is a simple page caching solution for WordPress for caching pages at the server. Pages that are served to anonymous users are stored in Redis, with memcache(d) also supported. Additional hits to server will be served out of the cache until the page expires. This may seem redundant since I have Cloudflare providing full page caching but caching at the server itself ensures that Cloudflare’s many points of presence get a consistent copy from the server.

Cloudflare

The Cloudflare plugin is good by itself but required if you are using their APO option for WordPress. With this plugin, API calls are made to Cloudlfare to clear the CDN cache when certain events happen in WordPress, like saving a new post.

Cookie Notice and Compliance

Cookie Notice and Compliance for that sweet GDPR compliance. Presents that annoying “we got cookies” notification.

Redis Object Cache

Redis Object Cache is my preferred object caching solution. I find Redis, combined with this plugin, to be the best object caching solution available for WordPress.

Site Kit by Google

Site Kit by Google, another plugin by my employer, is the best way to integrate some useful Google services, like Google Analytics and Google Adsense, into your WordPress site.

That is the complete set of plugins that are deployed and activated on my site. In addition to this smallish set of plugins I also employ another method to keep my site running as quickly as I can, which I described in Speed up WordPress with this one weird trick. These plugins, combined with the mentioned trick, ensure the backend remain as responsive as possible. New Relic reports that the typical, average response time of the site is under 200ms even if the traffic to the site is pretty low. This seems pretty good to me while using the most basic droplets Digital Ocean has to offer.

Do you host your own site? Leave a comment describing what your methods are for hosting your own site!